CrowdStrike Lesson - QA is Critical

Stronger QA policies and more cautious rollouts could have prevented the global outage.

We may think of QA (Quality Assurance) roles as existing only in software development. But testing is a critical part of any robust infrastructure, whether you're producing software or deploying it. An entry-level QA job can be a logical first step toward a cybersecurity career, because it teaches you to always ask, "What could possibly go wrong?"

The recent outage caused by a faulty CrowdStrike update gives cybersecurity instructors and students an interesting case study in the importance of QA.

What is CrowdStrike?

CrowdStrike is a cybersecurity company whose Falcon platform is used by many large corporations. Falcon watches for suspicious activity on computing devices and intervenes accordingly, but it is far more complex and powerful than your average antivirus software. With a large global install base (the July outage alone affected roughly 8.5 million Windows devices, according to Microsoft), CrowdStrike is able to “crowd source” cyber intelligence from millions of machines. Computers running CrowdStrike software observe the behaviors and idiosyncrasies of malicious code and report these details back to CrowdStrike. This rich insight into the latest attack techniques enables the company to produce highly accurate, predictive software that stops malware before it can do damage.

CrowdStrike has been instrumental in detecting, tracking and uncovering some of the most pernicious recent malware campaigns and the threat actor groups behind them. Although the outage may tarnish its reputation for a while, the company has clearly learned hard lessons, and the whole industry can benefit from examining the details. See CrowdStrike's Remediation and Guidance Hub for more on these lessons and what the company intends to do about them.

The hub goes deep into the technical details; what follows is a pared-down summary.

How CrowdStrike Software Works

CrowdStrike's software consists of the following parts:

A) The sensor software installed on computers is called Falcon. This is the main engine for malware detection. It includes on-sensor AI and machine learning models.

The Falcon software stack includes these additional pieces:

B) Sensor Content

C) Rapid Response Content update files

D) Template Types

E) Template Instances

F) Channel files

How a Bug Slipped through QA Testing

Sensor Content always ships with Falcon sensor releases, which undergo extensive testing before mass deployment to customer systems. The Falcon version at the center of this outage, 7.11, was released successfully in February 2024, and everything worked fine.

Between sensor releases, new detection content is delivered by means of Rapid Response Content update files (C). These are also tested and validated before general release. To load and use new content, Falcon also relies on Template Types (D), Template Instances (E) and Channel Files (F).

Falcon's Content Interpreter uses the Channel File to interpret Rapid Response Content. The Content Interpreter is designed to gracefully handle exceptions from potentially problematic content.
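
To make "gracefully handle exceptions" concrete, here is a minimal Python sketch of an interpreter that matches a Template Instance's criteria against the input values it is handed. Everything in it is hypothetical and heavily simplified: the `TemplateType` and `TemplateInstance` classes, their fields, and the `interpret` function are illustrative names, not Falcon internals (the real Content Interpreter runs in kernel mode, and its design is not public). What matters is the bounds check: a criterion that points at a field which doesn't exist is rejected instead of being read.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class TemplateType:
    """Hypothetical: a template that defines how many input fields it expects."""
    name: str
    field_count: int


@dataclass
class TemplateInstance:
    """Hypothetical: values to match, keyed by field index (None = wildcard)."""
    template: TemplateType
    criteria: Dict[int, Optional[str]]


def interpret(instance: TemplateInstance, inputs: List[str]) -> Optional[bool]:
    """Return True on a match, False on no match, None if the content is unusable.

    Every field index is checked against both the template definition and the
    inputs actually supplied, so bad content is skipped rather than read past
    the end of the data.
    """
    for index, expected in instance.criteria.items():
        if not (0 <= index < instance.template.field_count and index < len(inputs)):
            print(f"skipping {instance.template.name} content: field {index} unavailable")
            return None  # fail safe: ignore this content, keep the sensor running
        if expected is not None and inputs[index] != expected:
            return False
    return True


ipc = TemplateType("IPC", field_count=3)
good = TemplateInstance(ipc, {0: "pipe_open", 1: None})
bad = TemplateInstance(ipc, {0: "pipe_open", 2: "suspicious"})
print(interpret(good, ["pipe_open", "example_pipe"]))
print(interpret(bad, ["pipe_open", "example_pipe"]))
```

Returning `None` (skip the content) rather than failing keeps one bad update from taking the host down. In kernel-mode C there is no such built-in safety net: an unchecked out-of-bounds read can touch invalid memory and crash the whole system.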

None of these procedures were new to CrowdStrike's test and release cycle. The process had been humming along this way for some time, safely and effectively… until it wasn't.

What Happened This Time?

Falcon 7.11, released in February, included a new Template Type to detect abuse of named pipes, which CrowdStrike called the InterProcessCommunication (IPC) Template Type. It passed all of CrowdStrike's internal validation and stress testing and was then shipped to the Falcon 7.11 install base.

Once deployed, however, the Template Type was only a framework: it waited for content updates describing the latest named pipe attack techniques.

Template Instances deliver those updates to Falcon sensors, and they are themselves delivered inside Channel Files. Between March and April, four Template Instances were tested and released to production, and they performed fine.

Then, on July 19, two more Template Instances were released, and this is where the crash occurred. Both had passed CrowdStrike's internal testing, but one contained problematic content that slipped past the Content Validator because of a bug in the validator itself. When the Falcon sensor received Channel File 291 and loaded it into the Content Interpreter, it caused an out-of-bounds memory read, triggering an exception that crashed Windows systems.
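
In Python, reading past the end of a list raises an `IndexError`; in kernel-mode C code, the same mistake reads whatever lies beyond the array, and touching invalid memory there takes down the whole operating system. That is why catching the problem before release matters. Below is a hypothetical sketch of the kind of check a content validator could run: it compares every field a Template Instance references with the number of input values the sensor will actually supply. The function `validate_channel_file`, its `supplied_inputs` parameter and the dictionary layout are illustrative assumptions, not CrowdStrike's actual Content Validator or Channel File format.

```python
from typing import Dict, List


def validate_channel_file(instances: List[Dict], supplied_inputs: int) -> List[str]:
    """Return a list of problems; an empty list means the content may ship.

    Hypothetical layout: each instance is {"name": str, "criteria": {index: value}}.
    `supplied_inputs` is how many input values the sensor code will pass to the
    interpreter for this template type.
    """
    problems = []
    for inst in instances:
        for index in inst["criteria"]:
            # Any referenced field outside the supplied inputs would be an
            # out-of-bounds read at runtime, so reject it now.
            if not 0 <= index < supplied_inputs:
                problems.append(
                    f"{inst['name']}: references field {index}, "
                    f"but only {supplied_inputs} inputs are supplied"
                )
    return problems


channel_file = [
    {"name": "instance-1", "criteria": {0: "pipe_open", 1: None}},
    {"name": "instance-2", "criteria": {0: "pipe_open", 2: "suspicious"}},
]
for problem in validate_channel_file(channel_file, supplied_inputs=2):
    print("REJECT:", problem)
```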

The Falcon sensor behaves somewhat like a rootkit in that it loads as a kernel driver early in the boot process. If it crashes, Windows crashes with it. So the system repeatedly crashes and reboots, as the Channel File triggers the same memory exception on every boot. The fix is manual (booting into Safe Mode or the Windows Recovery Environment and deleting the faulty Channel File) and in many cases requires a desk-side visit to each computer, so recovery is time-consuming.

New Preventative Measures - A Lesson for the Classroom

Hard lessons have been learned. On its Remediation and Guidance Hub, CrowdStrike lists the following improvements to its QA practices, which make a good outline for classroom discussion.

Don't just teach how to build code; also teach how to test it and deploy it cautiously to production.

Coding classes should discuss these enhanced techniques and learn from one of the biggest QA lessons in history.
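
Fuzzing, one of the testing types CrowdStrike lists below, makes a good hands-on exercise. The following sketch feeds thousands of random byte strings to a parser for a made-up content format (one count byte, then length-prefixed fields) and flags any exception other than the `ValueError` the parser is supposed to raise for malformed input. The format, `parse_content` and `fuzz` are hypothetical classroom examples, not anything CrowdStrike ships.

```python
import random
from typing import Callable, List


def parse_content(data: bytes) -> List[bytes]:
    """Parse a hypothetical content file: 1 count byte, then length-prefixed fields.

    Any malformed input must raise ValueError; any other exception is a bug.
    """
    if not data:
        raise ValueError("empty file")
    count, pos, fields = data[0], 1, []
    for _ in range(count):
        if pos >= len(data):
            raise ValueError("truncated: missing length byte")
        length = data[pos]
        pos += 1
        if pos + length > len(data):
            raise ValueError("truncated: field runs past end of file")
        fields.append(data[pos:pos + length])
        pos += length
    return fields


def fuzz(parser: Callable[[bytes], object], iterations: int = 10_000, seed: int = 0) -> List[bytes]:
    """Feed random byte strings to `parser` and collect inputs that crash it."""
    rng = random.Random(seed)  # fixed seed so any crash is reproducible
    crashes = []
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(16)))
        try:
            parser(data)
        except ValueError:
            pass  # expected: malformed content rejected gracefully
        except Exception:
            crashes.append(data)  # anything else is a bug worth fixing
    return crashes


print(f"{len(fuzz(parse_content))} crashing inputs found")
```

As written, the parser should survive the fuzzer with no crashing inputs. Delete its first length check and rerun: `data[pos]` starts raising `IndexError` on truncated files and the fuzzer reports them, the same class of out-of-bounds read behind this outage, caught by a test instead of by customers.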

The new test-and-release strategy below is quoted directly from the CrowdStrike website.

"Software Resiliency and Testing

- Improve Rapid Response Content testing by using testing types such as:
  - Local developer testing
  - Content update and rollback testing
  - Stress testing, fuzzing and fault injection
  - Stability testing
  - Content interface testing

- Add additional validation checks to the Content Validator for Rapid Response Content. A new check is in process to guard against this type of problematic content from being deployed in the future.

- Enhance existing error handling in the Content Interpreter.

Rapid Response Content Deployment

- Implement a staggered deployment strategy for Rapid Response Content in which updates are gradually deployed to larger portions of the sensor base, starting with a canary deployment.

- Improve monitoring for both sensor and system performance, collecting feedback during Rapid Response Content deployment to guide a phased rollout.

- Provide customers with greater control over the delivery of Rapid Response Content updates by allowing granular selection of when and where these updates are deployed.

- Provide content update details via release notes, which customers can subscribe to."
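
The staggered, canary-first deployment in the quote can be sketched as a loop that widens the rollout only while the hosts already updated stay healthy. This is a hypothetical simulation: `staggered_rollout`, the stage fractions, the 1% failure threshold and the `is_healthy` callback (a stand-in for the sensor and system monitoring CrowdStrike mentions) are assumptions, and nothing is actually deployed.

```python
from typing import Callable, List


def staggered_rollout(
    hosts: List[str],
    stages: List[float],
    is_healthy: Callable[[str], bool],
    max_failure_rate: float = 0.01,
) -> List[str]:
    """Deploy an update to growing fractions of `hosts`, halting on bad health.

    `stages` holds cumulative fractions such as [0.01, 0.1, 0.5, 1.0]; the first
    stage is the canary. Returns the hosts that received the update.
    """
    deployed: List[str] = []
    for fraction in stages:
        target = max(1, round(len(hosts) * fraction))
        batch = hosts[len(deployed):target]
        deployed.extend(batch)  # simulated push; a real system would deliver here
        if not batch:
            continue
        failures = sum(1 for host in batch if not is_healthy(host))
        if failures / len(batch) > max_failure_rate:
            print(f"halt at {fraction:.0%}: {failures}/{len(batch)} hosts unhealthy")
            return deployed  # stop widening the rollout (and, ideally, roll back)
        print(f"stage {fraction:.0%} ok: {len(deployed)} hosts updated")
    return deployed


hosts = [f"host-{i}" for i in range(1000)]
stages = [0.01, 0.1, 0.5, 1.0]
staggered_rollout(hosts, stages, is_healthy=lambda host: True)
staggered_rollout(hosts, stages, is_healthy=lambda host: False)
```

With 1,000 simulated hosts, a healthy update reaches all of them in four stages, while an update that makes every host unhealthy stops after the 10-host canary batch. A real rollout would judge health over time (crash reports, hosts that stop checking in) rather than by calling a function instantly.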

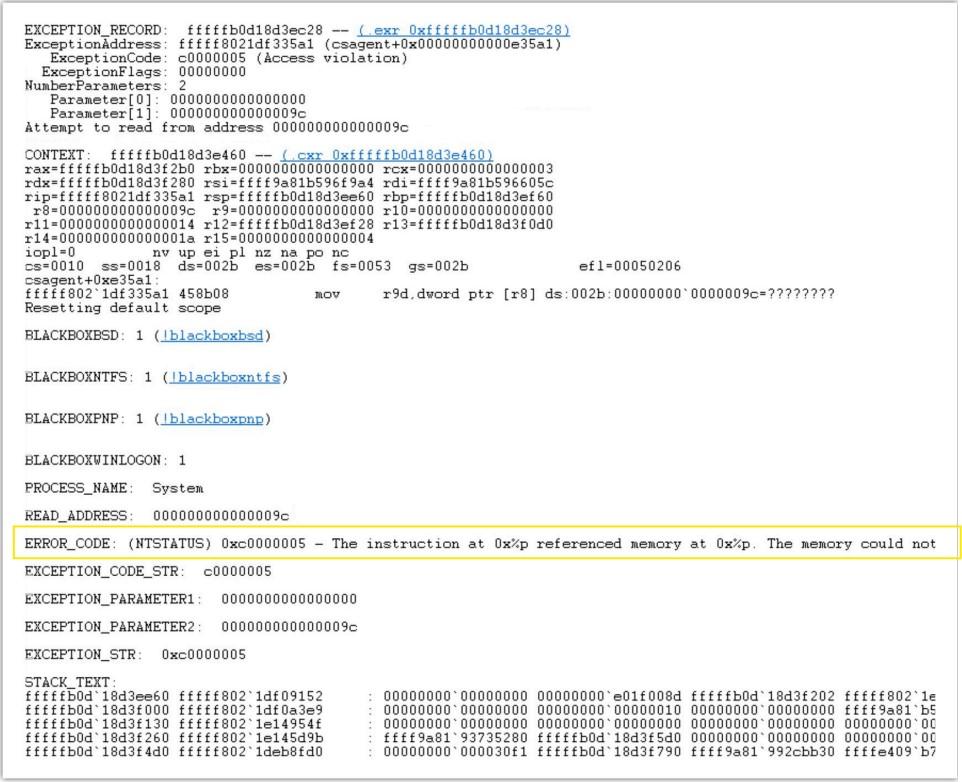

The simple memory exception that stalled the world. Taken from the transcript of Steve Gibson's Security Now podcast.